Robotic weapons that can autonomously identify the enemy, place firepower in position, and assist in targeting are almost a reality.[1] War waged by intelligent machines and directed by humans ensconced in bunkers or roaming in stand-off command centers at sea or in the air can alter the face of the battlefield. Such wars can change the very definition of conflict.

Change will start with the possibility that vulnerabilities in enemy configuration may be diagnosed better and faster with machine learning algorithms, which can also decide more accurately whether the enemy is where we expect it to be and in what strength. Open flanks and weak points can be dynamically discovered using models that predict movement in time and space. Finally, optimal moments of attack or withdrawal can be determined using machine learning, a method that, based on past experience, can identify what to do next. This second revolution in military affairs will not stop here, though. There is the clear possibility that the collection of data analytic tools will take the next and necessary step: full autonomy.

Will future autonomous fighting systems be a boon or an ultimate threat to humanity? I argue that the danger, while real, is not that the machines will rebel, but that they will be too obedient to their programmers, destroying each other and their creators. To avoid the possibility of Mutual Assured Destruction re-enacted by robotic warfare, it is in the interest of all combatants to program their war machines to follow the Golden Rule, instilling in them the fear of their own annihilation. This might make the war robots less precise, but being more discriminating will make them easier to blend with human decision making. This solution is necessary both philosophically and from a military doctrine perspective. The full technical implementation – which will take decades to solve – is another problem that is not the main point of this article. I do propose, however, that a way to do it is to use N-version decision making routines, which I briefly discuss at the article’s end.

By some definitions, the systems under debate could be called artificially intelligent war machines.[2] Their emergence has been met with two reactions. On the one hand, some fear that military AI machines will turn rogue. What if an AI agent decides to launch an attack based on false assumptions, harming civilians or its own troops? Fearing this, over 3,000 scientists, scholars, and technologists, including Elon Musk and the founders of Google DeepMind, signed a pledge not to develop “killer robots.”[3]

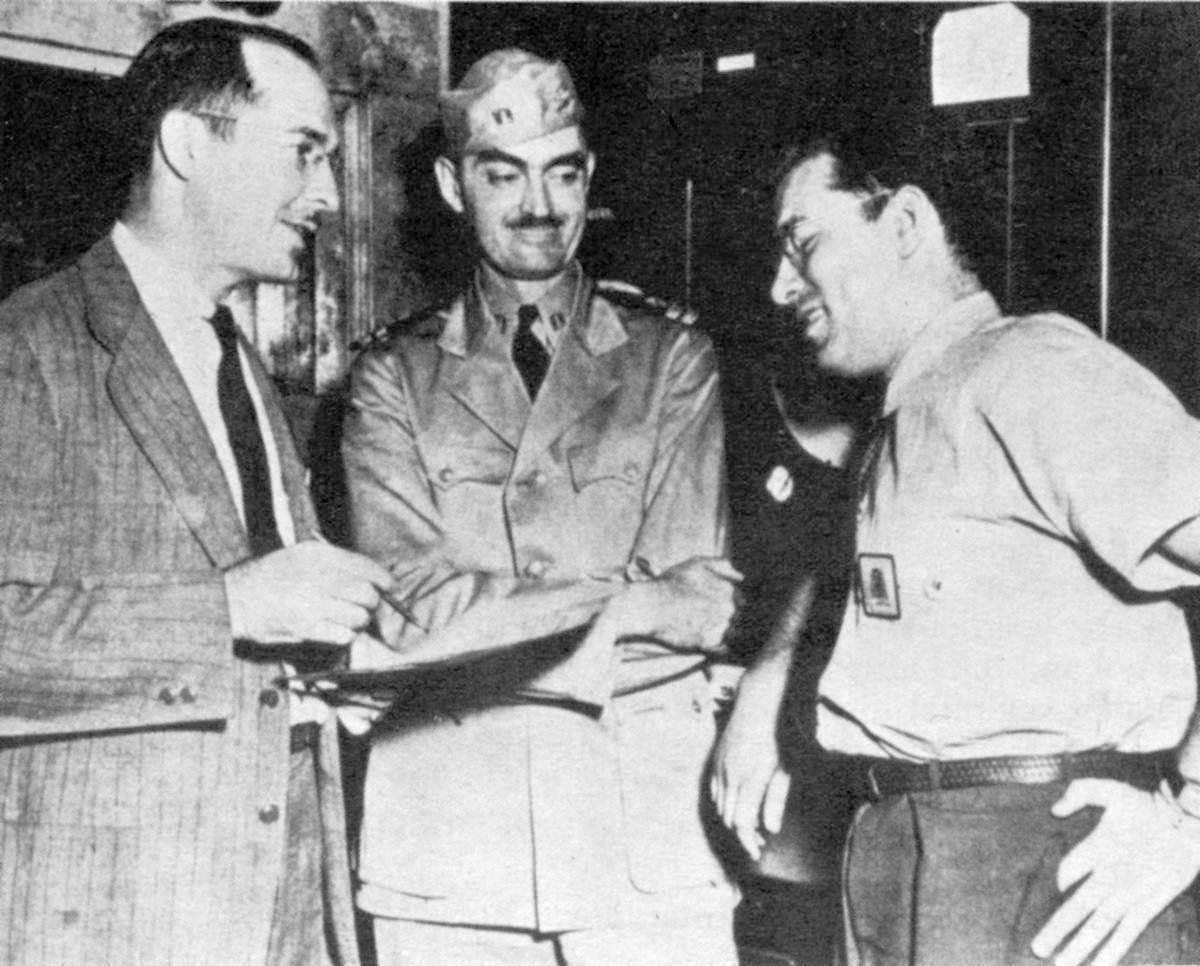

Robert Heinlein, L. Sprague de Camp and Isaac Asimov, at the Philadelphia Navy Yard in 1944. Image courtesy of Wikimedia.

On the other hand, some hope that military AI agents can be designed from the ground up to behave ethically. Some imagine, in a manner similar to the famous “Three Laws of Robotics” formulated by Isaac Asimov, that AI will be hardwired to always defend and protect human lives, even at the expense of military goals.[4] According to this scenario, only necessary force will be used and only against those for whom it was intended. This raises, for some, the expectation that the emergence of AI will make war more, not less, rule-bound. In effect, technology will finally achieve what the Geneva Conventions could not do: minimize the impact of violence on civilians and treat prisoners humanely.[5]

Both lines of thinking remain, for now, on the drawing board. Realistically, the emergence of Skynet, the Hollywood-invented human-hunting, self-aware, Matrix-like AI, is at least three to four decades away.[6] Current AI technologies remain a collection of statistical predictive mechanisms that improve their performance by iteratively feeding on new observations, which leads to increasingly complex mechanisms wherein known characteristics are matched with unclassified observations. At each turn, the power to predict how known characteristics match new data improves. The predictive machinery is intelligent, and it learns, in the sense that it adapts by adjusting its parameters. This, however, is nothing new; it is a mere feedback mechanism.
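The feedback loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a linear predictor, a simple error-correcting update (the least-mean-squares rule), and made-up observations. The function names and the learning rate are hypothetical and do not describe any fielded system.

```python
def predict(weights, features):
    """Weighted sum of the observed characteristics."""
    return sum(w * x for w, x in zip(weights, features))


def feedback_update(weights, features, target, learning_rate=0.1):
    """Nudge each parameter in proportion to the prediction error."""
    error = target - predict(weights, features)
    return [w + learning_rate * error * x for w, x in zip(weights, features)]


def train(observations, passes=200):
    """Feed the same observations repeatedly; the parameters adapt each time."""
    weights = [0.0] * len(observations[0][0])
    for _ in range(passes):
        for features, target in observations:
            weights = feedback_update(weights, features, target)
    return weights


if __name__ == "__main__":
    # Made-up data consistent with target = 2*x1 - 1*x2.
    data = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0), ((1.0, 1.0), 1.0)]
    print(train(data))
```

Nothing in this loop questions its own premises: it only shrinks the gap between prediction and observation, which is the sense in which the machinery is a feedback mechanism.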

There are, however, prospects for creating future “artificial intelligence” agents that may be able to work down from abstract premises, premises that can move from feedback to feedforward logics. One may define, for example, the concepts of enemy, truck, and attack propositionally, providing intrinsic characteristics, contextual definitions, and observational correlatives for each. Enemy could be defined, for example, as an exhaustive list of propositional definitions following a pattern such as: “organized human and technology force that carried out past military operations.” Defining truck would be simpler, combining technical characteristics and a comprehensive collection of images and 3D models. Observational data could refer to travel patterns of specific entities that fit the descriptions provided by the definitions for enemy and truck. AI would then have the role and the ability to decide if a truck belongs to the enemy, if it is in an offensive or defensive stance, what type of attack it is going to launch if it is in attack mode, and finally whether the AI itself should open fire, taking into account costs and benefits that might lead to a variety of scenarios. Machine learning algorithms cannot do this yet. Future AIs must learn how to assemble all inputs into a generalizable decision-making matrix that accounts not only for observations but also for deeper knowledge of the qualities and intentions of the friend or foe. If we are to use Rumsfeld’s famous quip about “known knowns,” “known unknowns,” and “unknown unknowns,” military artificial intelligence currently explores “known unknowns.”[7] True AIs deal with “unknown unknowns.”[8]

Although AI technology is quite far from making inferential leaps by which it can analyze its own assumptions, goals, and domains of application – or change them from the ground up – let us imagine this vision might become reality sooner (20-50 years) rather than later. The question that emerges is: are such military AI agents a net benefit for military science in particular and for humanity in general? The answer involves a difficult trade-off between precision and resource-consuming operations. Advanced military AI may reason well enough to intensify warfare, because war-making becomes more precise and the targeting more selective.[9] From a systems theory perspective, these AI agents are near-deterministic, in the sense that once their pattern recognition and fire accuracy are perfected, systems theory can expect near-deterministic effects.[10] This is similar to macro-physical systems, in which the mass, speed, and position of any object can determine its future position.

Deterministic systems have intrinsic advantages and disadvantages. On the positive side, the more asymmetric the relationship between attacker and attacked, the higher the accuracy, since attackers can choose the time and context of the attack without interference. Higher accuracy also leads to less collateral damage. In specific instances, robotic warfare might end up harming fewer civilians and fewer of the attacking troops. However, the more symmetric warfare becomes, and the more balanced the force ratio and robotic capabilities, the more devastating a deterministic system becomes. As the battle flow becomes more precise, resulting in carefully calculated engagements, the deterministic logic of each automated actor increases the intensity of the conflict by ceaseless propagation and unfailing pursuit of the set goal: destroy the enemy at all costs. Mutual reduction of forces becomes almost guaranteed. More precisely, when evenly matched forces continue battling each other, the last two machines will mutually destroy each other or only one of them will be left standing, half-burned on the battlefield.

I, ROBOT by Isaac Asimov. Gnome Press, 1950. Cover art by Edd Cartier.

Let us unpack this statement with a thought experiment, starting from the axiom that near-deterministic precision comes with near-perfect efficiency. In AI-driven warfare, less firepower is needed to destroy a given unit of enemy strength. Imagine a peer conflict, even a medium-size one on a par with the 1967 Arab-Israeli War or Operation Iraqi Freedom. In such a scenario, hundreds or thousands of near-deterministic fire platforms – naval, air, and land-based – will pour vast amounts of destruction on each other’s troops and weapons systems quickly, precisely, and remorselessly, destroying in a predictable cascade entire divisions, naval battle groups, or air armies. Such destruction will work near-deterministically, meaning that, except for the unavoidable accidents, each weapon system will shoot with near-perfect precision at the target, which will ensure the highest destructive power. Furthermore, the damage inflicted on the opponent will be calculated in such a way as to “pay off” one’s own losses, even if they are devastating. In other words, if the opposing force is annihilated, one’s own losses could be allowed to come as close as necessary to major losses, too.

How will this scenario work operationally? First, the logic of war demands recognizing and acting on information about the enemy’s intentions. Current AI technologies are very good, and will become even better, at recognizing signals in the noise, therefore increasing the reaction and destructive speed of weapons and armies.[11] Second, wars tend to be zero-sum games, as they have winners and losers, at least in a military sense. As Clausewitz writes, “war is an act of force, and there is no logical limit to the application of that force.”[12] Even when wars allow for negotiation, persuasion, or agreement, these activities are always concluded, so to speak, at gunpoint. Furthermore, the desired end result is rarely a compromise, unlike conversations and negotiations unattended by force; a clean win-lose result is preferred.

The machine learning/AI-driven wars of our thought experiment will not diverge from, but rather harden, the axiom that rules all wars: apply force liberally, unreservedly, and for long periods of time.[13] As force is used more deliberately, trade-offs – a characteristic of all wars – will become normalized and destruction on a greater scale acceptable. Machine learning algorithms that predict certain outcomes for certain human and material costs will never flinch. If what is asked of machines is to deliver a certain result, then they will calculate and provide the outcome promptly. Neither will commanders using AIs recoil from making tough decisions. After all, for the first time they will know what the bloodshed bought them.

Balancing huge costs against even greater benefits is nothing new, and it remains the sad companion of any peer conflict within the near-deterministic framework of our thought experiment. In near-deterministic AI warfare, loss is not to be minimized but optimized. Losses, even on a staggering scale, are justified if the stakes are commensurately large. The battle of Gettysburg was decisive because of, not in spite of, the staggering number of combatants who died or were incapacitated over three days of battle: between 45,000 and 50,000.[14] Of course, losses, even if monumental, are not always justified by outcomes. In the Battle of the Somme, British troops suffered nearly 60,000 casualties in one day, more than the two fighting forces at Gettysburg combined.[15] Yet, given the minimal strategic effect, the results did not justify the scale of the loss.[16]

Little Round Top, Sykes Avenue, Gettysburg, PA, USA, July 2020. Photo by Nehemias Mazariegos.

To summarize the point of our thought experiment: warmaking is not a type of police operation, in which, unless absolutely needed, human losses among both the enforcers and those subdued are to be spared. Warmaking is the massive and effective application of human and material sacrifice to obtain the desired result. AI-driven, near-deterministic war will embrace this logic to the bitter end. The only caveat is that losses unjustified by winnings are to be avoided, a safeguard that, as I will show below, might be an illusion. Only honor charges, pyrrhic victories, and mindless banzai charges are inimical to well-considered warfare because they do not lead to any meaningful result.

At the same time, from a purely ethical perspective, the expectations surrounding AI warfare lead to the conclusion that their near-deterministic capabilities can make difficult decisions defensible. If the prize is great and the outcome worth fighting for, no effort will be spared and no cost too high. If commanders and fighters do not minimize, but optimize the use of force, automated war demands not only optimal, but overwhelming optimal force.

The old, tried, and tested truths of war are not mitigated by the emergence of AI command, control, and fighting capabilities. Much utopian wishful thinking insists that, with computerized command and control, modern warfare will become less lethal, shifting from destroying to saving human lives. If history is any proof, however, the smarter the war, the more comprehensively destructive it is. By kill ratio, Operation Desert Storm exemplifies this fact. While the U.S. and allied troops lost only around 300 combatants, the losses of the Iraqis at the hand of highly automated and computer-directed fire missions were staggering: between 8,000 and 50,000.[17] At the upper estimate, this is a kill ratio that could exceed 1 to 100. Imagine a war in which both combatants display the same level of technological sophistication and willingness to inflict pain on each other.

Faster and more efficient battle-winning decisions can be made if the decision-making apparatus moves from humans to highly automated machine intelligence, decision support, and targeting. Speaking in game theory terms, the situation will result in a perfect prisoner’s dilemma, which opposing AIs might solve with the expected logical result: “tit for tat.”[18] Forced to battle each other, while limited by minimum requirements of force conservation, the AIs will inflict harm on each other and on the opposing armies at a pace that will continue without end. This ensures mutual destruction. Only when the opposing forces are uneven will AIs recognize the inevitable effect of Lanchester’s Laws for calculating the outcomes of armed conflict: in most modern warfare, where all participants can engage each other, the effect of one force on the other increases with the square of one’s own force size.[19] But this means that the outcome of most automatic wars could be predicted by the force ratio alone. Given sufficient extra strength, one’s own attrition rate will be sufficiently “reasonable” to pay for the complete destruction of the adversary.[20]
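The force-ratio argument can be made concrete with a minimal Python sketch of Lanchester’s square law. It rests on simplifying assumptions that are not in the text: two homogeneous forces, constant per-unit effectiveness (`alpha` for side A, `beta` for side B), aimed fire in which every unit can engage every enemy unit, and a crude fixed time step. The function names and the one-unit stopping threshold are illustrative choices.

```python
import math


def simulate_square_law(a0, b0, alpha=1.0, beta=1.0, dt=0.001):
    """Euler-integrate dA/dt = -beta*B and dB/dt = -alpha*A.

    alpha: assumed constant effectiveness of each A unit against B.
    beta:  assumed constant effectiveness of each B unit against A.
    The loop stops once either side falls below one unit.
    """
    a, b = float(a0), float(b0)
    while a >= 1.0 and b >= 1.0:
        a, b = a - beta * b * dt, b - alpha * a * dt
    return max(a, 0.0), max(b, 0.0)


def predicted_survivors(a0, b0, alpha=1.0, beta=1.0):
    """Closed form: alpha*A**2 - beta*B**2 stays constant during the fight."""
    balance = alpha * a0 ** 2 - beta * b0 ** 2
    if balance >= 0:
        return math.sqrt(balance / alpha), 0.0
    return 0.0, math.sqrt(-balance / beta)


if __name__ == "__main__":
    for a0, b0 in [(2000, 1000), (1000, 1000)]:
        simulated = simulate_square_law(a0, b0)
        print(a0, b0, [round(x) for x in simulated], predicted_survivors(a0, b0))
```

Under these assumptions, a force of 2,000 facing 1,000 equally capable units ends with about 1,732 survivors (the square root of 2,000² minus 1,000²), so a two-to-one advantage costs the stronger side far less than half its strength. Two evenly matched forces, by contrast, grind each other down to nothing at the same moment.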

Given this, the law of robotic warfare can be stated as: the more precise the war machines, the more devastating one’s own losses in a peer conflict. On this basis, one might expect fully automated wars to become unacceptable to any great power. Fearing mutually assured destruction by conventional means, U.S., Chinese, or Russian supreme commands might rely more and more on humans assisted by machines, rather than accepting a system of command of machines assisted by men, even if the former were less efficient or effective than the latter.

However, even if humans remain firmly in the saddle, there will always be the temptation for one side to unleash its own automated weapon systems with minimal human control, even if costs could be high. To meet this challenge, a new Law of Robotic Warfare is proposed, which relies on a new method of training AIs that could be both fast and beholden to humans. This is the Golden Rule, which in this context should be formulated as the right and obligation of any AI to do unto other AIs or humans as it would want them to do unto it.[21] Furthermore, each decision would be given to a federation, rather than to one specific AI, so that a system of checks and balances may be created. Technically, this involves an N-version decision making process that assigns each decision to a series of routines. N stands for “a number of” routines, each independent of the others and each loaded with a slightly different information set and the commandment of self-preservation. An N-version decision making process takes into account not how good or efficient the decision of an AI is, but how likely the AI is to show algorithmic “respect” to other AIs or human rules and operators.
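As a rough illustration only, the federation idea can be sketched in Python. Everything here is an assumption made for the sake of the example and not the cited authors’ implementation: the `Proposal` and `Routine` types, the 0-to-1 harm scale, the way differing information sets are modeled as a simple bias, and the two-thirds quorum.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Sequence


@dataclass(frozen=True)
class Proposal:
    label: str
    harm_to_others: float  # hypothetical 0..1 estimate of harm imposed


@dataclass(frozen=True)
class Routine:
    name: str
    information_bias: float  # each routine sees slightly different data
    self_tolerance: float    # harm the routine would accept if done unto it

    def respects_golden_rule(self, proposal: Proposal) -> bool:
        """Vote yes only if the perceived harm is harm it would accept itself."""
        perceived = proposal.harm_to_others + self.information_bias
        return perceived <= self.self_tolerance


def federation_approves(
    proposal: Proposal,
    routines: Sequence[Routine],
    quorum: Fraction = Fraction(2, 3),
) -> bool:
    """Approve only if at least a quorum of independent routines vote yes."""
    if not routines:
        return False
    votes = sum(r.respects_golden_rule(proposal) for r in routines)
    return votes >= quorum * len(routines)


if __name__ == "__main__":
    federation = [
        Routine("n1", 0.00, 0.30),
        Routine("n2", 0.05, 0.30),
        Routine("n3", -0.05, 0.30),
    ]
    for harm in (0.10, 0.28, 0.40):
        print(harm, federation_approves(Proposal("example", harm), federation))
```

The sketch scores a proposal on whether enough independent routines would accept the same treatment themselves, not on how efficient the proposal is, which is the shift in criterion the paragraph above describes.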

These are, of course, for now mere scenarios born of a thought experiment. Furthermore, as argued, the solutions should not and cannot be merely technical. Deep and considerate analysis of the ethical dimensions of future warfare demands joining the perspective of the technologist with that of the military commander and of the ethicist-philosopher. Finally, conclusions and recommendations should be pragmatic. We do not live in a world of angels. Warfare on a massive scale is a possibility and preparedness is, at the very least, a sign of wisdom. It is important, however, to think not only about war but also about the peace that will come after it, which should be presided over by the Golden Rule.

Sorin Matei holds a Ph.D. in communication technology research from the Annenberg School of Communication, and an MA in International Relations with a focus on military affairs from The Fletcher School of Law and Diplomacy, Tufts University. Some of his work has been featured in Wired Magazine and Defense News, and he has contributed articles to the National Interest, Washington Post, Foreign Policy, and BBC World Service.

The Strategy Bridge is read, respected, and referenced across the worldwide national security community—in conversation, education, and professional and academic discourse.

Header Image: Galaxy's Edge, Disneyland California, January 2020 (Rod Long).

Notes:

[1] Paul Scharre, Introduction, Army of None: Autonomous Weapons and the Future of War, 1 edition (W. W. Norton & Company, 2018).

[2] Scharre, Army of None.

[3] Anonymous and Future of Life Institute, “Lethal Autonomous Weapons Pledge,” NGO, Future of Life Institute, n.d., https://futureoflife.org/lethal-autonomous-weapons-pledge/.

[4] Isaac Asimov, I, Robot (Spectra, 2004); Christoph Salge, “Asimov’s Laws Won’t Stop Robots from Harming Humans, So We’ve Developed a Better Solution,” Scientific American: The Conversation (blog), July 11, 2017, https://www.scientificamerican.com/article/asimovs-laws-wont-stop-robots-from-harming-humans-so-weve-developed-a-better-solution/.

[5] Ronald C. Arkin, “Ethical Robots in Warfare,” IEEE Technology and Society Magazine 28, no. 1 (Spring 2009): 30–33, https://doi.org/10.1109/MTS.2009.931858.

[6] Max Tegmark, “Benefits & Risks of Artificial Intelligence,” Advocacy, Future of Life Institute, 2016, https://futureoflife.org/background/benefits-risks-of-artificial-intelligence/.

[7] Donald Rumsfeld, “Defense.Gov Transcript: DoD News Briefing - Secretary Rumsfeld and Gen. Myers,” Department of Defense, February 12, 2002, http://archive.defense.gov/Transcripts/Transcript.aspx?TranscriptID=2636.

[8] Sorin Adam Matei and Kerk F. Kee, “Computational Communication Research,” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 9, no. 4 (2019), https://doi.org/10.1002/widm.1304.

[9] Greg Allen and Taniel Chan, “Artificial Intelligence and National Security,” Study, Belfer Center Paper (Cambridge, Ma: Belfer Center, Harvard University, 2017), https://www.belfercenter.org/sites/default/files/files/publication/AI%20NatSec%20-%20final.pdf.

[10] John Von Neumann, “Probabilistic Logics and the Synthesis of Reliable Organisms from Unreliable Components,” Automata Studies, no. 34 (1956): 43–98.

[11] Scharre, Army of None.

[12] Carl von Clausewitz, On War, trans. Rosalie West, Michael Howard, and Peter Paret (Princeton, NJ: Princeton University Press, 1984).

[13] Margarita Konaev, “With AI, We’ll See Faster Fights, but Longer Wars,” National Security, War on the Rocks (blog), October 29, 2019, https://warontherocks.com/2019/10/with-ai-well-see-faster-fights-but-longer-wars/.

[14] John W. Busey and David G. Martin, Regimental Strengths and Losses at Gettysburg (Hightstown, NJ: Longstreet House, 2005), https://catalog.hathitrust.org/Record/008997363.

[15] “First Battle of the Somme: Forces, Outcome, and Casualties,” in Encyclopedia Britannica, July 19, 2019, https://www.britannica.com/event/First-Battle-of-the-Somme.

[16] John Keegan, The First World War (New York: Knopf, 1999).

[17] “Persian Gulf War: Definition, Combatants, and Facts,” in Encyclopedia Britannica, March 7, 2019, https://www.britannica.com/event/Persian-Gulf-War.

[18] Robert Axelrod and Richard Dawkins, The Evolution of Cooperation, Revised edition (New York: Basic Books, 2006).

[19] F. W. Lanchester, “Mathematics in Warfare,” in The World of Mathematics, ed. J. R. Newman (New York: Simon and Schuster, 1956).

[20] J. G. Taylor, “Force-on-Force Attrition Modeling” (Linthicum, Md: Military Applications Society, 1980).

[21] Sorin Adam Matei and Elisa Bertino, “Can N-Version Decision-Making Prevent the Rebirth of HAL 9000 in Military Camo? Using a ‘Golden Rule’ Threshold to Prevent AI Mission Individuation,” in Policy-Based Autonomic Data Governance, ed. Seraphin Calo, Elisa Bertino, and Dinesh Verma, Lecture Notes in Computer Science (Cham: Springer International Publishing, 2019), 69–81, https://doi.org/10.1007/978-3-030-17277-0_4.